The Artificial Intelligence Investable Ecosystem (AIIE)

Think of artificial intelligence like electricity in the early 1900s. Back then, investors didn't just bet on light bulb companies. They invested in power plants, copper mines, and electrical equipment makers. Today's AI revolution works the same way. There's a whole ecosystem of companies making AI possible, and understanding this map can help you see where the money is really flowing.

The Story Behind the AI Boom

When ChatGPT launched in late 2022, over 100 million people tried it within two months. That moment changed investing forever. Suddenly, AI wasn't science fiction. It was something your grandma could use to write emails.

Since then, money has poured into AI investments like water through a broken dam. AI-focused investment funds have grown fourteen times larger in just two years. That's not a typo: 14x growth. By mid-2025, over $30 billion sat in funds specifically hunting for AI opportunities.
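To put the 14x figure in yearly terms, here is a rough back-of-the-envelope sketch. It assumes the 14x multiple and the $30 billion total describe the same set of funds over the same two-year window, which the figures above suggest but do not state outright. The function and variable names are illustrative only.

```python
# Back-of-the-envelope growth math for the figures quoted above.
# Assumption: the 14x multiple and the $30 billion total refer to the
# same group of funds over the same two-year period.

def annualized_growth(multiple: float, years: float) -> float:
    """Return the constant yearly growth rate that compounds to `multiple`."""
    return multiple ** (1.0 / years) - 1.0

growth_multiple = 14.0   # from the article
years = 2.0              # from the article
assets_now_bn = 30.0     # from the article, in billions of dollars (lower bound)

rate = annualized_growth(growth_multiple, years)
implied_start_bn = assets_now_bn / growth_multiple

print(f"Annualized growth: {rate:.0%} per year")
print(f"Implied starting assets: ${implied_start_bn:.1f} billion")
```

Under those assumptions, 14x in two years means assets nearly quadrupled each year, starting from a base of only a couple of billion dollars.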

But here's the tricky part: Which companies actually count as "AI companies"? Is it just the ones building ChatGPT-style chatbots? What about the companies making the computer chips that power AI? Or the electric companies keeping those massive data centers running?

The answer is: all of them. AI is like a chain, and every link matters.

Layer 1: The Foundation Model Providers

What they do: These companies create the foundational models, which are the AI "brains" trained on massive datasets. They build the actual software that can write essays, generate images, or answer questions.

While each of these model providers will find its own advantages serving both B2C (business-to-consumer) and B2B (business-to-business) customers, make no mistake: they are all competing fiercely for consumer market share. These companies check their daily active users (DAUs) constantly and monitor their rankings on the App Store and Google Play charts. Consumer adoption drives everything in AI, from brand perception to enterprise sales.

The Giants Everyone Knows

Google (GOOGL): The Sleeping Giant That Could Dominate Everything

Here's what most investors miss: while everyone watches ChatGPT, Google might be building the most dominant AI position of all time.

Google researchers introduced the Transformer architecture in 2017, the core technology behind nearly every modern large language model. DeepMind's Demis Hassabis and John Jumper shared the 2024 Nobel Prize in Chemistry for AlphaFold, which is used by over 2 million researchers globally to accelerate drug discovery and disease research.

The real story is scale. Google's AI Overviews reach 2 billion monthly users. Gemini has 450 million monthly active users. AI Overviews now appear in over 50% of all searches. Google processes 13 billion searches daily, giving them a data advantage no competitor can match.

Leading DeepMind is Demis Hassabis, who believes we're on track to reach AGI (artificial general intelligence) in five to ten years. "If everything goes well, we should be in an era of radical abundance, a kind of golden era," he told Wired. He talks about curing all diseases and solving civilization-level problems with AI.

Google has the computing power, the distribution (Search, YouTube, Android, Chrome), and the talent. They don't just have one path to dominance. They have many.

Microsoft (MSFT) teamed up with OpenAI (the creators of ChatGPT) and basically won the early race. They put AI into Word, Excel, and PowerPoint, making every office worker in the world a potential AI user. Their Azure cloud service became the default choice for companies wanting to add AI to their products.

Meta (META) took a different path. Instead of keeping their AI secret, they released LLaMA for free, like giving away the recipe for Coca-Cola. Why? They want everyone building AI to use their technology, making Meta the standard everyone depends on.

Amazon (AMZN) plays a different game. While they offer foundation models through AWS Bedrock, their real strength is being the infrastructure provider. AWS rents out AI computing power and hosts other companies' models, positioning Amazon as the enabler rather than the star performer.

Apple (AAPL): The Patient Giant Waiting to Strike

Apple is noticeably late to AI, and that might be exactly their strategy. Reports surfaced in August 2025 that Apple approached Google about using Gemini to power a revamped Siri, with Google training a custom model for Apple's servers. Apple is testing multiple providers internally, evaluating who can deliver at the scale only a few companies possess.

Here's what makes Apple dangerous: they own the hardware. Over 2 billion active devices. When Apple decides the moment is right, they don't need downloads or website visits. They push an update and instantly control the largest AI distribution platform on Earth. The revamped Siri is expected to launch in spring 2026. Apple could go from AI laggard to dominant player overnight, not by building the best model, but by controlling the most valuable consumer distribution channel in technology.

The Comeback Kids

IBM (IBM) went from "Watson wins Jeopardy!" headlines to quietly becoming the trusted AI provider for banks, hospitals, and governments. These are industries where you can't afford mistakes.

Baidu (BIDU) leads AI in China with their ERNIE models, embedded in everything from search to self-driving cars.

Notable Private Players

OpenAI (backed by Microsoft) created ChatGPT and remains the consumer market leader, despite being private. Their GPT models set the standard everyone else chases.

Anthropic (backed by Amazon and Google) built Claude, focusing on AI safety and competing directly in the consumer chatbot space.

xAI (Elon Musk's venture) launched Grok, integrated with X (formerly Twitter), aiming to leverage social media data for training and distribution.

DeepSeek shocked the market in early 2025 by releasing highly capable models at a fraction of the training cost, proving that Chinese AI labs could compete on efficiency and innovation, not just scale.

Layer 2: The Cloud Infrastructure Platforms

What they do: These companies rent out the massive computing infrastructure needed to run AI workloads.

Think of AI like a restaurant. The brain builders (above) create the recipes. But you need a kitchen to actually cook. That's what cloud companies provide.

Amazon AWS, Microsoft Azure, and Google Cloud dominate this space. They're building data centers the size of small towns, filled with powerful computers that anyone can rent by the hour.

It's like Airbnb, but for supercomputers. A startup in a garage can rent the same computing power as a Fortune 500 company. They just pay for what they use.

Oracle (ORCL) and Alibaba Cloud (BABA) serve more specialized markets: Oracle for big businesses running old-school databases, Alibaba for Asia's booming tech scene.

Layer 3: The Semiconductor & Hardware Layer

What they do: They design and manufacture the physical computing hardware and chips (called GPUs and accelerators) that make AI training and inference possible.

This is where things get interesting for investors.

Nvidia: The 800-Pound Gorilla

Nvidia (NVDA) controls 92% of the AI accelerator market. Yes, 92%. They didn't plan this. They were making GPUs (graphics processing units) for video games. But it turned out those gaming chips were perfect for training neural networks, the mathematical systems that power AI.

But here's what most people miss: Nvidia isn't just selling chips anymore. They're building complete AI infrastructure systems. They design the full stack, from GPUs to networking to software, and they'll even help customers install systems using other companies' chips. CEO Jensen Huang famously stated that Nvidia's total cost of ownership (TCO) is so good that "even when the competitor's chips are free, it's not cheap enough."

That's because Nvidia focuses on factors like time to deployment, performance, utilization, and flexibility across the entire system. They're now building what they call "AI factories," complete reference designs that integrate power, cooling, networking, and compute as a unified whole. When you buy from Nvidia, you're not just buying a chip. You're buying into an entire ecosystem.
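The "free chips aren't cheap enough" argument is easier to see with numbers. The sketch below is purely illustrative: every price, throughput figure, and utilization rate is a hypothetical placeholder, not a figure from Nvidia or any competitor. It only shows how cost per unit of useful work can favor a pricier chip once system costs, performance, and utilization are counted.

```python
from dataclasses import dataclass

@dataclass
class AcceleratorOption:
    name: str
    chip_cost: float            # purchase price per accelerator, dollars (hypothetical)
    system_cost: float          # power, cooling, networking, facility share (hypothetical)
    relative_throughput: float  # useful work per busy hour, arbitrary units (hypothetical)
    utilization: float          # fraction of hours spent on useful work, 0 to 1

    def cost_per_unit_work(self, hours: float) -> float:
        """Total cost of ownership divided by useful work over the service life."""
        total_cost = self.chip_cost + self.system_cost
        useful_work = self.relative_throughput * self.utilization * hours
        return total_cost / useful_work

HOURS = 4 * 365 * 24  # hypothetical four-year service life

premium = AcceleratorOption("premium chip", 30_000, 40_000, 4.0, 0.80)
free = AcceleratorOption("free chip", 0, 40_000, 1.0, 0.50)

for option in (premium, free):
    print(f"{option.name}: ${option.cost_per_unit_work(HOURS):.3f} per unit of work")
```

With these made-up inputs, the free chip still costs several times more per unit of work, because the surrounding system costs are the same while the output is far lower. Different inputs would give a different answer, which is exactly why buyers model TCO rather than sticker price.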

This is why customers keep coming back, even at premium prices. Huang points out that Nvidia even helps customers who are designing competing AI processors, going as far as showing them what upcoming Nvidia chips are on the roadmap. It sounds counterintuitive, but it's smart business. They're so confident in their full-stack advantage that they can afford to be transparent.

Now every tech company is desperate to buy Nvidia chips. Imagine if only one company made car engines, and everyone from Toyota to Tesla had to buy from them. That's Nvidia's position.

Nvidia appears in 90% of AI investment funds. That's more than any other company. They've become impossible to ignore.

The Challengers

AMD (AMD) is trying to break Nvidia's monopoly with their MI300 chips. They're winning some big customers, but it's like trying to beat Apple with a new smartphone. Possible, but really hard.

Intel (INTC) is fighting to stay relevant after missing the AI boom. The U.S. government awarded Intel $7.86 billion in CHIPS Act funding plus a $3 billion defense contract, making them one of the largest recipients of government semiconductor support as America rebuilds domestic chip manufacturing. Despite this backing, Intel is racing to catch up with their Gaudi accelerators and a foundry pivot.

Broadcom (AVGO) makes the networking equipment that connects all these AI computers together. Think of them like the veins in a body.

The Enablers

TSMC (TSM) might be the most important company you've never heard of. They're a semiconductor foundry. They manufacture chips designed by others using advanced process nodes (like 3nm and 5nm technology). Nearly every major AI chip is fabricated in their facilities in Taiwan. No TSMC, no AI chips. Period.

ASML (ASML) makes the machines that TSMC uses. They have a complete monopoly on extreme ultraviolet (EUV) lithography, the most advanced chip-making equipment. The newest machines cost over $300 million each. There's literally no backup option if ASML has problems.

Micron (MU), Samsung, and SK Hynix make the specialized high-bandwidth memory (HBM) that AI accelerators need. Without enough memory bandwidth, even the fastest chip is useless. It's like having a race car with a fuel line too narrow to feed the engine.

Dell (DELL) and HPE (HPE) assemble everything into the actual servers that companies buy and install.

Layer 4: The Data Management & Preparation Platforms

What they do: They organize, clean, and prepare datasets so AI models can actually use them for training and inference.

Here's a secret: AI is only as smart as the data you feed it. Garbage in, garbage out. These companies handle data engineering, which means cleaning, organizing, and serving up data in formats AI can digest.

Snowflake (SNOW) became the cool kid by making it easy to store and analyze massive amounts of data in one place. Companies dump all their information into Snowflake, and AI can then access it instantly.

Oracle (ORCL) still runs the databases for most of the world's biggest companies. They're embedding AI into those ancient (but critical) systems.

Layer 5: The Vertical AI Application Layer

What they do: Deploy AI models into specific industries or use cases, creating purpose-built solutions.

While the big players build general-purpose AI, these companies focus on vertical integration, meaning they specialize in particular industries:

Palantir: The Enterprise AI Execution Machine

Palantir (PLTR) deserves special attention because they're proving something critical: they can make AI actually work at enterprise scale.

A 2025 MIT report found that about 95% of enterprise generative AI pilots fail to deliver a measurable return. Most companies bolt on a shiny model, call it a strategy, and wonder why nothing changes. Palantir's Artificial Intelligence Platform (AIP) tells a different story entirely.

The results speak for themselves. Walgreens scaled their AI deployment from 10 stores to 4,000 stores in eight months. Sompo Japan added $60 million in profit with another $100 million expected. General Mills is saving $14 million annually. Fujitsu cut $9 million in costs in just three months. United Airlines avoided 300 delays and 20 cancellations. Heineken compressed a three-year software build down to three months.

These aren't pilot projects or proof of concepts. These are production deployments generating real cash flow improvements.

What Palantir figured out is that the hard part isn't having access to powerful AI models. The hard part is integrating those models into complex enterprise systems, connecting them to messy real-world data, training employees to use them effectively, and proving ROI to skeptical executives. AIP handles the entire workflow: from connecting data sources to building AI-powered applications to deploying them across an organization.

CEO Alex Karp has said he's not focused on winning; he's focused on dominating. The company evolved from a secretive government contractor (still doing critical work for defense and intelligence) into the leading enterprise AI deployment platform. When you look at their customer results, you understand exactly what Karp meant by "dominating."

In a world where most enterprise AI initiatives quietly fail and get written off, Palantir is one of the few companies consistently showing they can turn AI pilots into production systems that transform how businesses operate.

Other Vertical Specialists

BigBear.ai (BBAI) analyzes intelligence data for the military and government. Think: using AI to spot threats before they happen.

SoundHound (SOUN) powers voice AI in cars and devices. Every time you talk to your car's AI assistant, there's a good chance it's running on SoundHound technology.

C3.ai (AI) sells AI software suites to specific industries like oil, gas, and manufacturing. They're trying to be the "AI for everything" company outside of tech.

Innodata (INOD) does the unglamorous but essential work: preparing training data that tech giants use to teach their AI. Somebody has to label millions of images and text. That's Innodata.

Tesla: The Autonomous Future (or Is It?)

Tesla (TSLA) represents the highest-stakes bet on AI in the physical world: autonomous vehicles and robotaxis.

Tesla has been promising Full Self-Driving (FSD) for years. CEO Elon Musk has repeatedly predicted full autonomy would arrive within "one to three years" since 2013. Those timelines have never been met. As of September 2025, Tesla is rolling out FSD v14, which Musk claims will make the car "feel almost like it is sentient." But the system still requires human supervision and remains categorized as Level 2 automation, far from true autonomy.

The company's robotaxi ambitions are even bolder. Tesla launched a limited robotaxi service in Austin, Texas in June 2025, initially with a Tesla employee riding along as a safety monitor, and plans to expand to California. The company has accumulated over 50,000 autonomous miles in its Texas and California factories, using unsupervised FSD to move vehicles from production lines to loading docks. But federal regulators remain skeptical. NHTSA is actively investigating FSD's safety record and has pressed Tesla for details on how its robotaxi service will differ from the supervised FSD currently under scrutiny.

Here's the investment case: Tesla designs its own FSD chips and built Dojo, a custom AI training supercomputer (though Bloomberg reported in August 2025 that Tesla was shutting the Dojo project down). Every Tesla on the road collects real-world driving data, creating a massive training dataset competitors can't match. If Tesla achieves true autonomy, it unlocks a robotaxi network using millions of existing vehicles. Owners could lend out their cars when not in use, and Tesla takes a cut of every ride.

The skeptical view: After over a decade and billions invested, Tesla still hasn't delivered on autonomy promises. Competitors like Waymo already operate truly driverless robotaxis in multiple cities using different technology (lidar sensors vs. Tesla's camera-only approach). Safety concerns persist, with fatal crashes involving FSD making headlines. And Musk's timelines have proven consistently unreliable.

Tesla is either building the future of transportation or burning capital chasing an impossible goal. There's very little middle ground.

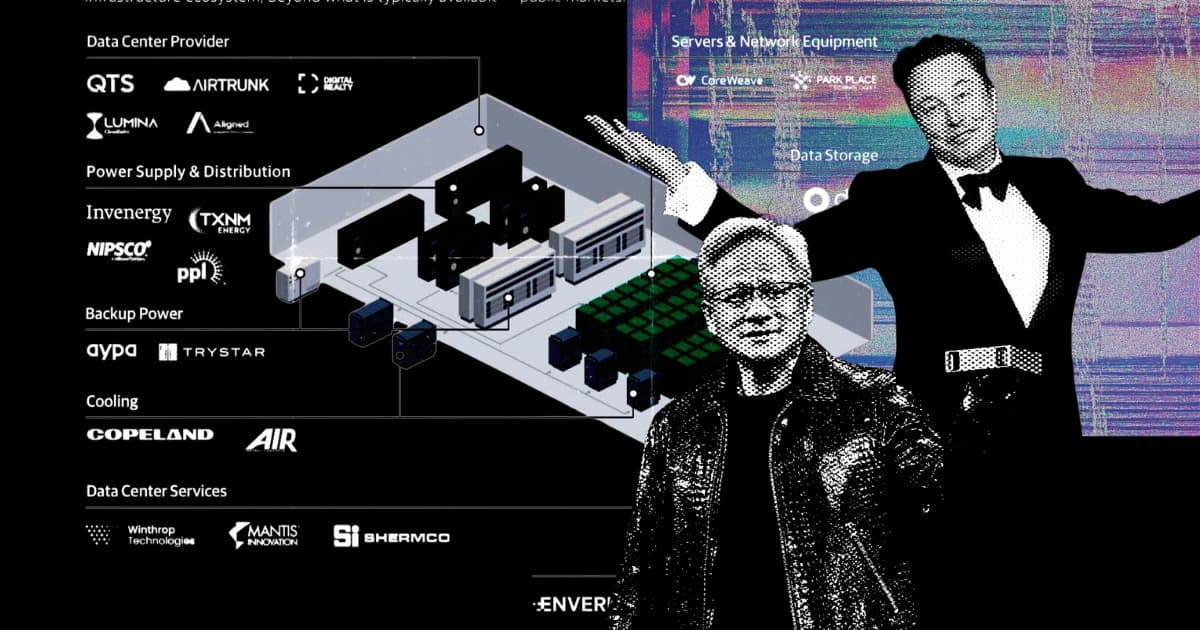

Layer 6: The Network Infrastructure & Data Center Layer

What they do: Build and operate the physical facilities and network connectivity that house AI compute clusters.

AI servers generate incredible heat and require high power density. You can't just stick them in a normal office building. They need specialized facilities with advanced cooling and redundant power systems.

Equinix (EQIX) and Digital Realty (DLR) own data centers worldwide and are racing to upgrade them for AI's extreme power needs.

Arista Networks (ANET) supplies the ultra-fast networking switches (400Gbps Ethernet and beyond) that let thousands of AI accelerators communicate in parallel. In AI training clusters, chips need low-latency, high-bandwidth connections. Arista makes that possible.

Cisco (CSCO) is the old networking giant trying to stay relevant by focusing on security and monitoring for AI systems.

Layer 7: The Energy & Power Infrastructure Layer

What they do: Provide the electrical power and thermal management systems that keep AI data centers running.

Here's a shocking fact: Training a single large language model (LLM) can use as much electricity as 100 homes use in a year. As AI scales, energy demand is exploding. Data centers now need gigawatts of power capacity.
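To get a feel for the scale, here is a rough conversion between homes, gigawatt-hours, and gigawatts. It assumes an average home uses about 10,500 kWh per year (roughly the US figure; other countries differ) and imagines a hypothetical data center drawing a constant 1 gigawatt. Both numbers are assumptions for illustration, not figures from this article.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours

# Assumption: an average home uses about 10,500 kWh per year (roughly the US level).
HOME_KWH_PER_YEAR = 10_500

def homes_equivalent(energy_kwh: float) -> float:
    """Convert an amount of energy into 'homes powered for one year'."""
    return energy_kwh / HOME_KWH_PER_YEAR

# The article's comparison: one training run is about 100 homes for a year.
training_run_kwh = 100 * HOME_KWH_PER_YEAR
print(f"Training run: {training_run_kwh / 1e6:.2f} GWh")

# Hypothetical data center drawing a constant 1 gigawatt all year.
datacenter_kw = 1_000_000  # 1 GW expressed in kilowatts
datacenter_kwh = datacenter_kw * HOURS_PER_YEAR
print(f"1 GW data center: {datacenter_kwh / 1e6:,.0f} GWh per year, "
      f"about {homes_equivalent(datacenter_kwh):,.0f} homes")
```

Under these assumptions, a single training run is about one gigawatt-hour, while a gigawatt-scale facility running around the clock uses thousands of times that every year. That gap is why ongoing data center demand, not any single training run, is what matters to utilities.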

NextEra (NEE), Duke (DUK), and Dominion (D) are utility companies racing to build enough power capacity for new data centers.

Vertiv (VRT) became a surprise AI winner by making the liquid cooling and power distribution systems that keep GPU servers from overheating. AI accelerators run so hot they need direct liquid cooling, similar to a car radiator, but for compute hardware.

AES (AES) and Fluence (FLNC) provide battery storage, so AI data centers can keep running even when the power grid fails.

Layer 8: The GPU Cloud Service Providers

What they do: Provide on-demand access to GPU compute resources, competing with hyperscale cloud platforms.

Not everyone wants to rent from Amazon or Microsoft. These companies are filling the gap:

CoreWeave (CRWV) started as a cryptocurrency miner, then pivoted to offering GPU-as-a-service for AI workloads. They signed multi-billion-dollar contracts with OpenAI and became a legitimate alternative to hyperscale clouds.

Nebius (NBIS) emerged from Russia's Yandex and signed a multi-year deal with Microsoft worth up to roughly $19 billion. They're betting that customers want options beyond the usual suspects.

Lambda Labs (still private) focuses on AI developers and researchers who want simpler, cost-effective access to GPU infrastructure for model training and inference.

The Big Picture: Understanding the AI Investment Map

Here's what savvy investors understand: AI isn't one thing. It's a technology stack. Layer upon layer of different technologies and companies working together.

Foundation Model Providers need Cloud Infrastructure Platforms

Cloud Platforms need Semiconductor & Hardware manufacturers

Hardware makers need Foundries and Memory suppliers

Everyone needs Data Management Platforms to handle datasets

All of it needs Network Infrastructure, Data Centers, and Power systems

And Vertical Application providers take the technology and deploy it to solve real-world problems
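The chain above can be sketched as a small dependency map. This is one possible reading, not a definitive model: the layer names are shortened, and the link from vertical applications to foundation models is an assumption based on "take the technology and deploy it." Data management, networks, data centers, and power are treated as shared dependencies because the list says everyone needs them.

```python
# Direct "needs" relationships as listed above (layer names shortened).
# Assumption: vertical applications build on foundation models.
CHAIN = {
    "vertical applications": ["foundation models"],
    "foundation models": ["cloud platforms"],
    "cloud platforms": ["semiconductors and hardware"],
    "semiconductors and hardware": ["foundries", "memory suppliers"],
}

# Layers the article says everything relies on.
SHARED = ["data management", "network infrastructure", "data centers", "power"]

def upstream(layer: str) -> list[str]:
    """Return every layer that `layer` relies on, directly or indirectly."""
    seen = set()
    stack = list(CHAIN.get(layer, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(CHAIN.get(current, []))
    # Add the shared layers, but never list a layer as its own dependency.
    return sorted((seen | set(SHARED)) - {layer})

for name in ("vertical applications", "cloud platforms"):
    print(f"{name} relies on: {', '.join(upstream(name))}")
```

Reading the output from the top of the stack down shows why a bet on any one layer is also an indirect bet on everything beneath it.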

When you invest in AI, you're really asking: which layer do I believe in? Do you bet on Nvidia maintaining its chip dominance? On Microsoft winning the enterprise AI race? On power companies profiting from exploding electricity demand?

There's no single right answer. But understanding how these pieces fit together helps you make smarter choices.

What Does This Mean for Investors?

Three key takeaways:

The Magnificent Seven Dominate: Microsoft, Google, Amazon, Meta, Apple, Nvidia, and Tesla appear in nearly every AI fund. They're unavoidable, which makes AI funds sometimes feel like expensive versions of regular tech funds.

US Companies Lead: Despite Europe having more AI investment funds, US companies dominate the actual holdings. Nearly every major AI stock is American. If you're investing in AI, you're mostly betting on America.

High Reward, High Risk: AI funds have beaten regular stock indexes by huge margins since 2022. But they're also more volatile. They go up bigger and down harder. This isn't a safe, steady investment.

The Bottom Line

The AI revolution isn't just about ChatGPT or self-driving cars. It's about a complete reshaping of how we compute, store data, use energy, and build applications.

From the chips in Taiwan to the power plants in Texas, from Microsoft's partnership with OpenAI to tiny specialists building voice AI, every piece of this ecosystem plays a role.

Understanding this map helps you see past the hype and understand where real value is being created. Because in the end, successful investing isn't about chasing the hottest stock. It's about understanding the story behind the companies and making informed bets on which parts of that story will win.

The AI Gold Rush has begun. Now you know where to look for gold.

Investment Disclaimer

The information provided in this article is for educational and informational purposes only and should not be construed as financial, investment, or professional advice. You may lose all of your money when investing. All investments carry substantial risk, including the potential for complete loss of principal. Past performance does not guarantee future results. You must conduct your own research and due diligence, including independently verifying all facts, numbers, and details provided in this article. Please consult with a qualified financial advisor before making any investment decisions.